The universal horror: The sound of your own voice

Have you ever heard a recording of your own voice? If your immediate reaction was “That sounds awful,” don’t worry—you are in the vast majority. In fact, if you type “own voice” into a Japanese Google search, the top suggested co-occurring word is “ugly.” Me? I desperately wish my voice sounded like Don LaFontaine, the legendary movie trailer voice actor.

While Mehrabian’s law famously suggests visual information (55%) is more influential than vocal tone (38%), the voice still holds significant, unexpected power. This is why I felt a chill last year when I read about Microsoft’s new AI synthesizer, VALL-E. This AI can simulate a person’s voice from just a three-second sample, read any text in that simulated voice, and even inject emotions like anger or sadness.

To avoid dying of self-hatred (or AI-induced paranoia), we must either improve our voices or, at least, understand the science of what constitutes a “good voice.”

The scientific disadvantage: Japan’s 1500Hz problem

Neuroscience has already clarified one technical definition of a pleasing sound: the frequency range around 3000 Hz. Voice that includes this frequency generally sounds good to the human ear.

Here, Japanese speakers face a slight disadvantage. According to the research of French doctor Alfred Tomatis, the average frequency of the English language is typically over 2000 Hz, while the Japanese language averages under 1500 Hz. We are statistically designed to sound less acoustically “good” than English speakers.

Given this clarity, creating a voice that hits that perfect 3000 Hz mark is trivially easy for AI synthesizers. But here’s the core issue: Does a scientifically “good” voice always feel emotionally “good” to humans?

Imagine C-3PO and R2-D2 suddenly speaking in gorgeously fluent, natural human voices created by VALL-E. Could you accept it? Probably not. Human psychology complicates everything, introducing elements like nostalgia and a profound, cultural appreciation for synthetic sound.

The synthetic heart of Japan: The vocaloid paradox

This is where Japan’s unique culture of Vocaloid (a portmanteau of “vocal” and “android”) comes into play. Vocaloid started as synthesizer software, but it evolved into a music genre starring virtual singers.

The history changed forever in 2007 with the release of Miku Hatsune. Miku is not just a singer; she is an open-source movement. People compose songs for her, share music videos online, and her synthetic voice is now instantly recognizable. Most Japanese fans can easily distinguish Miku’s unique synthetic tone and would be deeply unhappy if she suddenly sounded “human.” We actively demand a voice that sounds obviously synthetic. This is the Vocaloid Paradox: we prefer artificiality when it serves as a unique aesthetic.

Miku’s influence transcends the screen. Her “Hatsune Miku Expo” concert (see image below) tours globally. I once attended her concert, which featured a hologram Miku performing with a live band of human musicians. It was undeniably fun. Her character has since spread everywhere, independently of the software function.

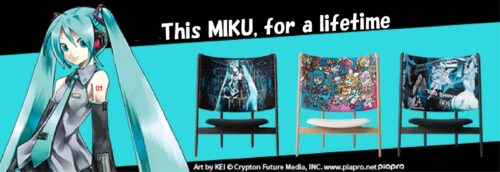

Now, for the official secret: I am writing about Miku so enthusiastically because we have a plan to collaborate with her. Wooden furniture and a virtual pop star—it sounds ridiculous, doesn’t it? But perhaps this collaboration is the perfect fusion of Miku’s future-forward synthetic aesthetic and our timeless, naturally crafted comfort.

I confess that I’d rather listen to a synthetic legend than the ‘ugly’ 1500Hz of my own voice—because in Japan, we’ve mastered the paradox of finding a soul within the artificial. Hatsune Miku isn’t just software; she is a 3000Hz revolution that redefined our culture. Our Hatsune Miku Art Chair is the physical resonance of that sound—a daring collaboration where the high-tech ‘unnatural’ aesthetic of a virtual icon meets the timeless, natural comfort of Hokkaido wood. It’s a seat that doesn’t just hold your body; it vibrates with the future. Now, here is a portal to the ultimate harmony: the image below is your link to the special site. If you prefer the dull, predictable frequencies of the status quo, do NOT click it. But if you’re ready to experience the perfect fusion of a digital heartbeat and a wooden soul, go ahead. Tune in. —— The Hatsune Miku Art Chair.

Shungo Ijima

Global Connector | Reformed Bureaucrat | Professional Over-Thinker

After years of navigating the rigid hallways of Japan’s Ministry of Finance and surviving an MBA, he made a life-changing realization: spreadsheets are soulless, and wood has much better stories to tell.

Currently an Executive at CondeHouse, he travels the world decoding the “hidden DNA” of Japanese culture—though, in his travels, he’s becoming increasingly more skilled at decoding how to find the cheapest hotels than actual cultural mysteries.

He has a peculiar talent for finding deep philosophical meaning in things most people ignore as meaningless (and to be fair, they are often actually meaningless). He doesn’t just sell furniture; he’s on a mission to explain Japan to the world, one intellectually over-analyzed observation at a time. He writes for the curious, the skeptical, and anyone who suspects that a chair might actually be a manifesto in disguise.

Follow his journey as he bridges the gap between high-finance logic and the chaotic art of living!

Comments

List of comments (1)

Blue Techker Awesome! Its genuinely remarkable post, I have got much clear idea regarding from this post . Blue Techker